Nudging is a form of social engineering — a way of designing system constraints and support structures to encourage the majority of people to behave in accordance with your plan.

Here’s a famous-in-my-classroom example of nudging:

Opt-in versus Opt-out Consent Solutions

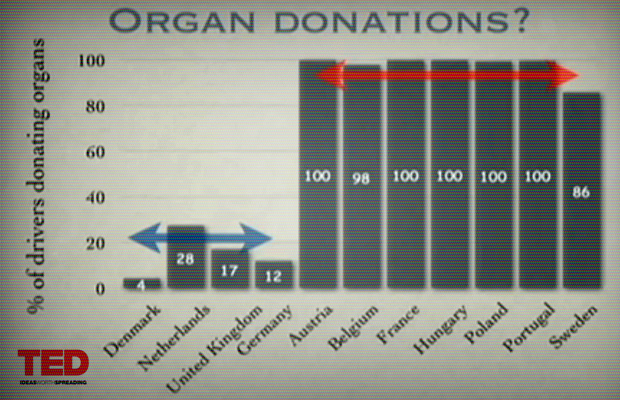

There are many examples of such social engineering. During our breakout groups at the NIH think tank on the future of citizen participation in biomedical research, I raised the difference in outcomes between opt-in and opt-out consent for organ donation. In some European countries, citizens have to opt out of donating their organs in case of a tragic accident: they have to take action NOT to donate their organs. As a result, in Austria, which has an opt-out system, the consent rate is 99.98%! Meanwhile, in Germany, which has an opt-in system, only 12% consent to donate their organs for transplant. This is a huge difference in consent between very similar populations.

Unintended Consequences of Social Design

Not all social engineering efforts go as well as opt-in/opt-out organ donation systems. To reduce pollution for the 2008 Summer Olympic Games in Beijing, the Chinese government established the even/odd license plate law: cars with even-numbered plates and cars with odd-numbered plates were allowed on the roads on alternating days. It seemed a simple and ingenious solution to the problem of too many cars… But ingenuity is not the sole purview of government agencies. As soon as the law was established, people found a way around it: just get two cars, one with an even and one with an odd license plate. Thus, rather than reducing the number of cars, the law actually spurred people to buy more of them, and it did not really reduce the number of cars on the road either.

Changing How People Think About Problems

Getting individuals to donate their medical records together with their genetic information is a difficult task, one that will require some careful social engineering. But it is not an impossible task. We change our minds all the time. What is culturally and socially acceptable at one moment in history can seem completely insane at another.

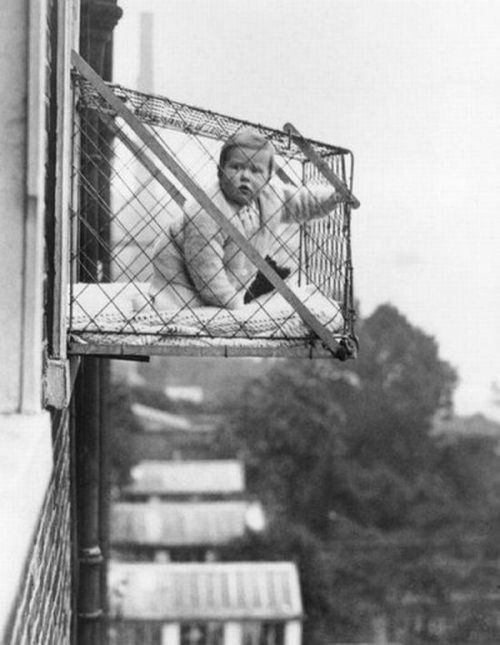

For some time now, parents have believed that fresh air is an important component of young children's health (although this was NOT always the case). When large numbers of people migrated into cities and backyards were no longer a normal part of childhood, parents invented other ways of giving their little sunshines a little sunshine.

The photo above would flood police hotlines today, but back in 1937, when it was taken, it was simply a clever solution to a city-living problem.

I think that once the health insurance barriers are gone, the moral imperative of driving biomedical research forward to save lives will overcome people's resistance about their data privacy. The privacy argument is great for organizations that want to sell data to others (e.g., Google), but it is not a great civics argument. To change people’s minds, though, there will need to be a concerted educational campaign that explains the issue. And each person needs to feel that they are participating in a meaningful, important project to which every contribution is valuable. Each person needs to feel like they are part of IT (and not outside of IT, the way it works now). When everyone sees each person as a citizen scientist, the data becomes everyone's to share.

This is a cultural shift: a shift in the power structure of research. This is an empowerment movement. We shouldn’t think of “giving up privacy” but of “gaining power” over our data.

Opt-out consent might be a good nudge in this direction, for both research organizations and private citizens.